One Vendor Sneezes, and the Internet Catches a Cold

When Cloudflare stumbled today, the world stumbled with them. Not because they failed, but because we’ve built the internet on a handful of shared dependencies. This wasn’t a glitch - it was a warning about convenience, fragility, and the illusion of control.

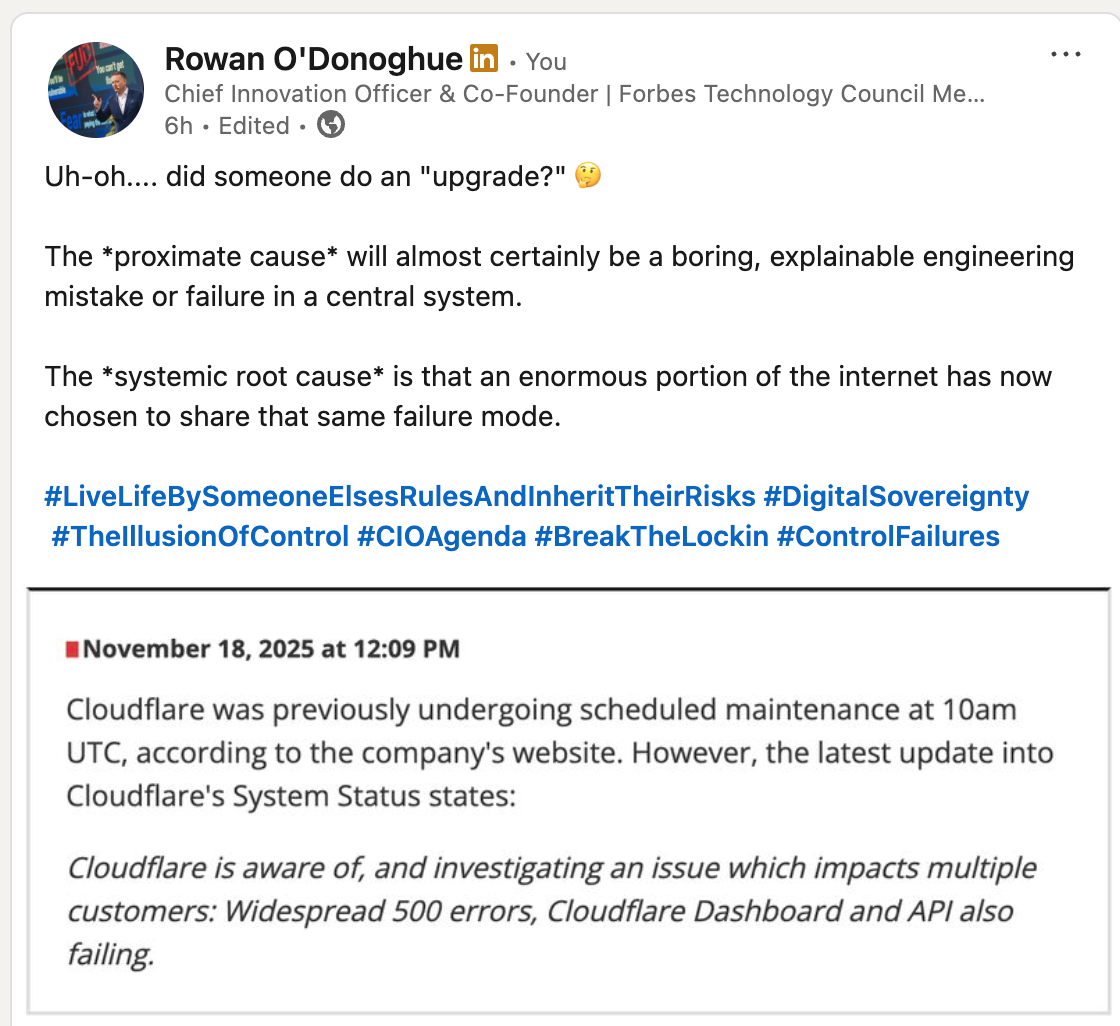

This morning, before anyone had confirmed anything, I posted a little jab on LinkedIn:

“Uh-oh… did someone do an upgrade?” 😏

It was completely tongue-in-cheek, obviously.

But let's be honest - it was also a fairly safe bet. Outages of this scale almost never start with something cinematic or catastrophic. There's no raging inferno in a datacentre. No mystery hacker in a hoodie hunched over a glowing terminal. No Bond villain stroking a cat while taking down the internet (OK, well, maybe that one someday 😎).

Nope, the proximate cause is usually something far more modest than that. A misconfigured rule. A runaway update. A propagation error in a control plane.

Something so boring it’ll make engineers nod knowingly and the rest of the world shrug. I mean seriously, at one point today you couldn't even order a cheeseburger. That's when you know things are really bad!

And yet that “boring cause” triggered a synchronised global wobble - banks, retailers, airlines, SaaS platforms, government portals, you name it. Truth be told, even I was hit while trying to book flights to our North American HQ; 3D Secure simply gave up and walked off the job.

The bottom line is very simple. Cloudflare went down - only for a few hours - and by God did the world feel it.

It tells you something when a routine engineering glitch suddenly becomes a matter of national conversation. People instinctively recognise that something deeper is wrong, even if they can’t quite articulate exactly what it is.

And here’s the part we need to sit with:

The problem isn’t just that Cloudflare broke. The problem is that the world breaks with them.

The impact was so sharp and so immediate that the media picked it up within minutes. SME ran a piece quoting my warning about shared failure modes and mass vendor consolidation. LBC brought me on-air to unpack what had happened, and tomorrow morning I’ll be continuing that discussion on BBC World News.

The attention is there because people are finally waking up to an uncomfortable truth: we’ve built a global digital civilisation on a handful of foundations that were never designed to carry this much of the world alone.

Cloudflare Isn’t the Villain - They’re the Case Study

Let me be crystal clear and say this upfront: Cloudflare are exceptional at what they do. They’ll publish a clean, transparent RCA. They’ll take responsibility and own the issue. Engineers everywhere will read it like scripture.

This isn’t about competence, but it is 100% about consequence.

When a single vendor is this good, this reliable, and this trusted, something very subtle happens. We all start building more and more of the world on top of them. Then everyone else does the same, and before long, we’ve created the single most fragile structure imaginable: a universally shared foundation.

Even a 30–60 minute wobble becomes a planetary-scale failure mode.

Fragility at Internet Scale

A single dependency is normal - a clean trade-off, an engineering reality.

But a single dependency shared by millions of organisations simultaneously is something else entirely.

When that dependency stumbles, we don’t get graceful degradation - we get global synchronisation.

Everyone drops at once.

Everyone recovers at once.

And everyone acts surprised, every time!

It’s the digital equivalent of every house on Earth sharing the same electricity fuse. One overload in one neighbourhood… and kettles stop boiling everywhere!

That’s not resilience.

That’s fragility - just at an industrial scale.

My BMW Helped Illustrate This Better Than I Could

As if scripted by the universe for comedic timing (and to make me look stupid), my brand-new BMW X5 decided to join the Cloudflare conversation this week, just after I returned from the Radar Summit in Sweden.

I’m driving along and all of a sudden the instrument cluster: gone. I mean black screen (of death?). No speed. No warnings. No messages, just darkness.

Now in a “modern luxury” machine, that’s not an inconvenience - that's a flippin' safety hazard wrapped in leather.

To top it off, almost as if I had inadvertently activated "Secret Agentic AI Mode", it just decides to apply the rear parking brakes with an ear-busting screech that could probably have been heard back in Stockholm.

And why did it happen? Not because the engine broke. Not because the brakes failed. Not because the car aged (very prematurely!). Nope, one tiny software component decided to take a day off and drag the entire system down with it. (separate article on this inbound!)

Modern engineering: built on interdependence, sabotaged by the very same interdependence.

And that is exactly the dynamic Cloudflare exposed today.

The Dependency We Pretend Isn’t There

One of the comments on my LinkedIn post from Chris Collotti captured the real issue:

“We’re all dependent on something.”

True. None of us can farm our own food, forge our own steel, or - much to the disappointment of my inner teenager - build our own Zero Trust stack in the shed beside the lawnmower, which I know is what Chris is really trying to do ;)

Dependency isn’t the enemy. Involuntary dependency is.

The kind you really didn’t choose. The kind you inherited because “everyone else was using it, so it must be safe - right?” The kind nobody architected for, challenged, or even questioned.

That’s where fragility hides - quietly, invisibly - until a morning like today.

The Leadership Responsibility

This is the part CIOs, CTOs, architects, and digital leaders must really internalise.

Resilience is not measured in uptime. Resilience is measured in optionality.

You can’t outsource that responsibility to a vendor - no matter how brilliant they are.
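To make "optionality" a little more concrete, here is a minimal Python sketch of an ordered fallback: try the preferred provider, then an alternative, then a deliberately degraded response. Everything in it is an assumption for illustration - the provider names, the `first_available` helper, and the stand-in functions are hypothetical, not anyone's real API.

```python
def first_available(providers, request):
    """Try each provider in order; return (name, result) from the first that works."""
    errors = {}
    for name, call in providers:
        try:
            return name, call(request)
        except Exception as exc:  # a real system would catch narrower error types
            errors[name] = exc
    raise RuntimeError(f"all providers failed: {errors}")


# Hypothetical stand-ins for real network calls.
def primary_edge(request):
    raise ConnectionError("primary edge unreachable")


def secondary_edge(request):
    return f"served {request} via secondary"


def static_fallback(request):
    return "service is degraded, showing cached page"


PROVIDERS = [
    ("primary", primary_edge),
    ("secondary", secondary_edge),
    ("static", static_fallback),
]

if __name__ == "__main__":
    print(first_available(PROVIDERS, "/checkout"))
```

The point isn't the dozen lines of code. The point is that the second and third entries in that list have to exist, and be tested, before the first one fails.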

Leaders must really be asking:

- Where are the single points of failure in our architecture?

- Are they obvious… or are they hiding inside vendor black boxes?

- If a dependency fails, do we degrade gracefully - or do we face-plant?

- Have we designed for sovereignty, or merely convenience?

- Have we built escape hatches - or have we locked ourselves into someone else’s destiny?
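The first two questions can be at least partly answered with a dependency inventory. Below is a rough Python sketch, assuming you can write down what each service depends on (including what your vendors depend on, where they disclose it). The service and vendor names are made up, and `transitive_deps` and `shared_failure_points` are hypothetical helpers, not part of any tool.

```python
from collections import defaultdict

# Hypothetical inventory: each entry maps to its direct dependencies.
# Every name here is invented for illustration.
DEPENDS_ON = {
    "checkout": ["payments-api", "cdn-vendor"],
    "payments-api": ["3ds-provider"],
    "3ds-provider": ["cdn-vendor"],
    "login": ["identity-vendor", "cdn-vendor"],
    "status-page": ["static-host"],
}


def transitive_deps(service, graph):
    """Return everything `service` relies on, directly or through its vendors."""
    seen = set()
    stack = list(graph.get(service, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, []))
    return seen


def shared_failure_points(services, graph):
    """Map each dependency to the set of services it would take down with it."""
    blast_radius = defaultdict(set)
    for service in services:
        for dep in transitive_deps(service, graph):
            blast_radius[dep].add(service)
    # Keep only dependencies shared by more than one service.
    return {dep: hit for dep, hit in blast_radius.items() if len(hit) > 1}


if __name__ == "__main__":
    front_doors = ["checkout", "login", "status-page"]
    shared = shared_failure_points(front_doors, DEPENDS_ON)
    for dep, hit in sorted(shared.items()):
        print(f"{dep}: takes down {sorted(hit)}")
```

In this toy inventory, `checkout` reaches `cdn-vendor` twice: once directly, and once through a payment provider's own dependency. That second path is the kind that hides inside a vendor black box.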

Today wasn’t Cloudflare’s failure. Today was our reminder.

The Vendor-Led Internet & The Illusion of Control

For a decade we’ve outsourced routing, identity, security, performance, observability, and in some cases our entire digital nervous system.

We convinced ourselves it made things “simpler.” What it really did was make things uniform.

Uniform architectures.

Uniform dependencies.

Uniform vulnerabilities.

Uniform failure modes.

And let's be honest, uniform is the opposite of resilient. We didn’t simplify the internet - we consolidated it. We didn’t reduce risk - we concentrated it.

And perhaps most importantly, we didn’t gain control - we just gave it away.

So, Where Do We Go From Here?

Cloudflare have already fixed the outage. They’re that good.

The bigger question is whether we will fix the architecture. This event won’t be remembered for the failure (until the next one!).

It’ll be remembered (I hope) for the wake-up call, which is this: resilience is not about preventing outages. It’s about ensuring the world doesn’t collapse when they happen.

Today reminded us of something uncomfortable but necessary.

Convenience is not a strategy. Vendor trust is not the same as vendor independence, and the internet is only as strong as the foundations we choose to build it on.

We don’t need a more reliable internet. We need a more sovereign one.